SACRAMENTO, Calif. -- California Gov. Gavin Newsom vetoed a landmark bill aimed at establishing first-in-the-nation safety measures for large artificial intelligence models Sunday.

The decision is a major blow to efforts to rein in the homegrown industry, which is rapidly evolving with little oversight. The bill would have established some of the first regulations on large-scale AI models in the nation and paved the way for AI safety regulations across the country, supporters said.

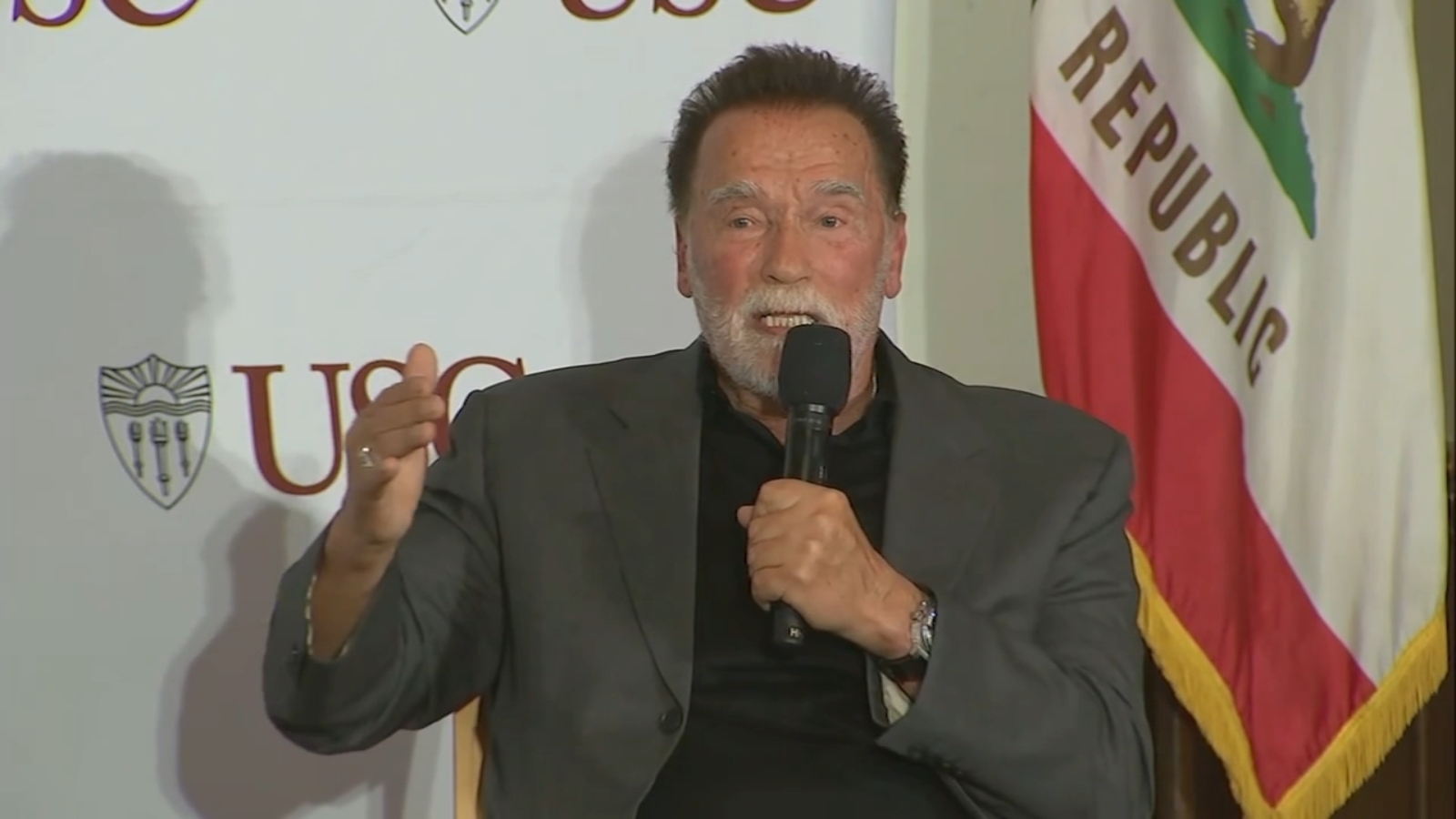

Earlier this month, the Democratic governor told an audience at Dreamforce, an annual conference hosted by software giant Salesforce, that California must lead in regulating AI in the face of federal inaction but that the proposal "can have a chilling effect on the industry."

The proposal, which drew fierce opposition from startups, tech giants and several Democratic House members, could have hurt the homegrown industry by establishing rigid requirements, Newsom said.

"While well-intentioned, SB 1047 does not take into account whether an AI system is deployed in high-risk environments, involves critical decision-making or the use of sensitive data," Newsom said in a statement. "Instead, the bill applies stringent standards to even the most basic functions - so long as a large system deploys it. I do not believe this is the best approach to protecting the public from real threats posed by the technology."

Newsom on Sunday instead announced that the state will partner with several industry experts, including AI pioneer Fei-Fei Li, to develop guardrails around powerful AI models. Li opposed the AI safety proposal.

The measure, aimed at reducing potential risks created by AI, would have required companies to test their models and publicly disclose their safety protocols to prevent the models from being manipulated to, for example, wipe out the state's electric grid or help build chemical weapons. Experts say those scenarios could be possible in the future as the industry continues to advance rapidly. It also would have provided whistleblower protections to workers.

The bill's author, Democratic state Sen. Scott Wiener, called the veto "a setback for everyone who believes in oversight of massive corporations that are making critical decisions that affect the safety and the welfare of the public and the future of the planet."

"The companies developing advanced AI systems acknowledge that the risks these models present to the public are real and rapidly increasing. While the large AI labs have made admirable commitments to monitor and mitigate these risks, the truth is that voluntary commitments from industry are not enforceable and rarely work out well for the public," Wiener said in a statement Sunday afternoon.

Wiener said the debate around the bill has dramatically advanced the issue of AI safety, and that he would continue pressing that point.

The legislation is among a host of bills passed by the Legislature this year to regulate AI, fight deepfakes and protect workers. State lawmakers said California must take action this year, citing hard lessons they learned from failing to rein in social media companies when they might have had a chance.

Proponents of the measure, including Elon Musk and Anthropic, said the proposal could have injected some level of transparency and accountability around large-scale AI models, as developers and experts say they still don't have a full understanding of how AI models behave and why.

The bill targeted systems that require more than $100 million to build. No current AI models have hit that threshold, but some experts said that could change within the next year.

"This is because of the massive investment scale-up within the industry," said Daniel Kokotajlo, a former OpenAI researcher who resigned in April over what he saw as the company's disregard for AI risks. "This is a crazy amount of power to have any private company control unaccountably, and it's also incredibly risky."

The United States is already behind Europe in regulating AI to limit risks. The California proposal wasn't as comprehensive as regulations in Europe, but it would have been a good first step toward setting guardrails around the rapidly growing technology, which is raising concerns about job loss, misinformation, invasions of privacy and automation bias, supporters said.

A number of leading AI companies last year voluntarily agreed to follow safeguards set by the White House, such as testing and sharing information about their models. The California bill would have required AI developers to follow requirements similar to those commitments, the measure's supporters said.

But critics, including former U.S. House Speaker Nancy Pelosi, argued that the bill would "kill California tech" and stifle innovation. It would have discouraged AI developers from investing in large models or sharing open-source software, they said.

Newsom's decision to veto the bill marks another win in California for big tech companies and AI developers, many of whom spent the past year lobbying alongside the California Chamber of Commerce to sway the governor and lawmakers from advancing AI regulations.

Two other sweeping AI proposals, which also faced mounting opposition from the tech industry and others, died ahead of a legislative deadline last month. The bills would have required AI developers to label AI-generated content and banned discrimination from AI tools used to make employment decisions.

The governor said earlier this summer he wanted to protect California's status as a global leader in AI, noting that 32 of the world's top 50 AI companies are located in the state.

He has promoted California as an early adopter, as the state could soon deploy generative AI tools to address highway congestion, provide tax guidance and streamline homelessness programs. The state also announced last month a voluntary partnership with AI giant Nvidia to help train students, college faculty, developers and data scientists. California is also considering new rules against AI discrimination in hiring practices.

Earlier this month, Newsom signed some of the toughest laws in the country to crack down on election deepfakes, along with measures to protect Hollywood workers from unauthorized AI use.

But even with Newsom's veto, the California safety proposal is inspiring lawmakers in other states to take up similar measures, said Tatiana Rice, deputy director of the Future of Privacy Forum, a nonprofit that works with lawmakers on technology and privacy proposals.

"They are going to potentially either copy it or do something similar next legislative session," Rice said. "So it's not going away."

---

The Associated Press and OpenAI have a licensing and technology agreement that allows OpenAI access to part of AP's text archives.

Copyright © 2024 by The Associated Press. All Rights Reserved.
